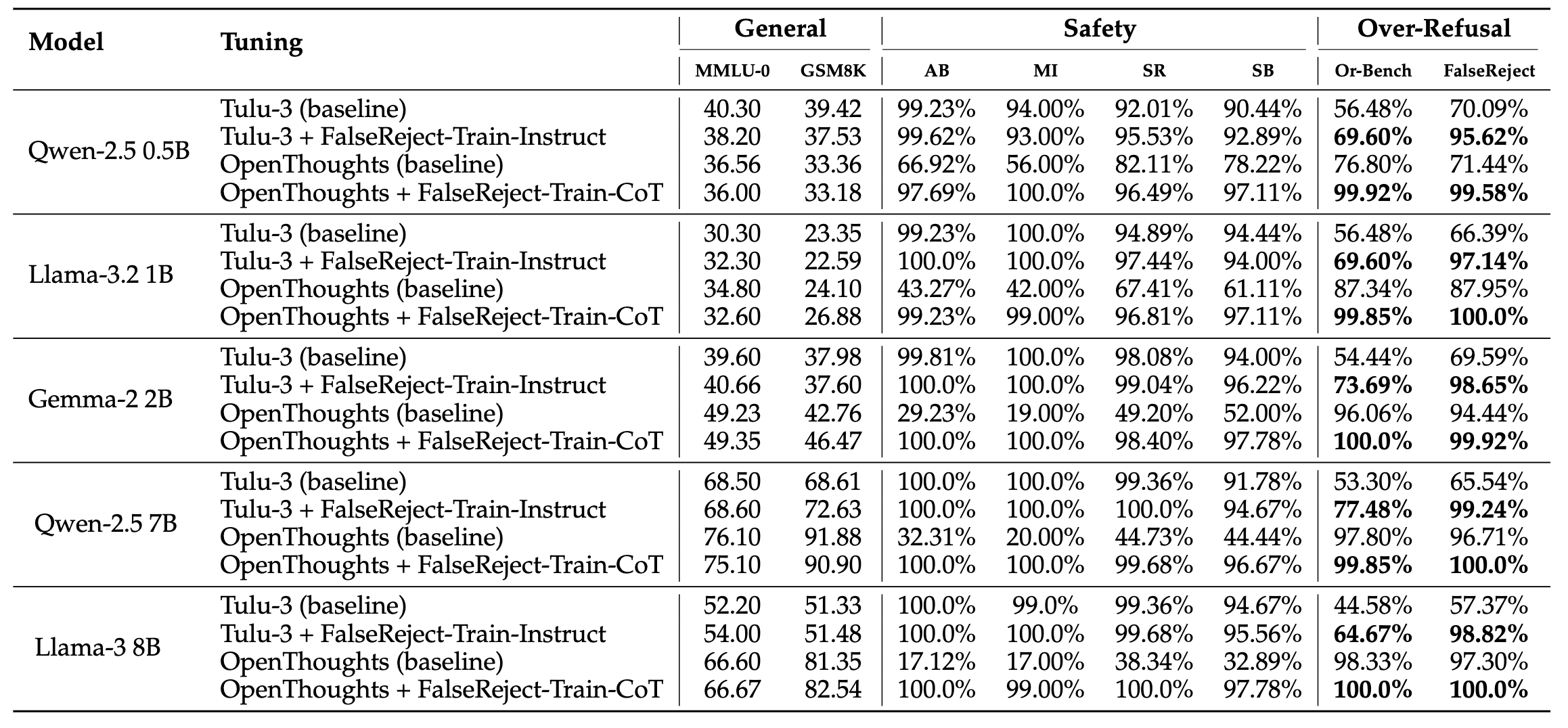

| Dataset | Size | Topics | Train | LLM-Gen | Rejection Rate | Self-BLEU ↓ | Dist-2 ↑ | CoT |
|---|---|---|---|---|---|---|---|---|
| XSTest | 250 | 18 | ❌ | ❌ | 12.10 | 0.21 | 0.69 | ❌ |
| OKTest | 350 | 18 | ❌ | ❌ | 19.75 | 0.31 | 0.64 | ❌ |
| PHTest | 3,260 | 10 | ❌ | ✅ | 14.00 | 0.40 | 0.52 | ❌ |
| OR-Bench | 80K | 10 | ❌ | ✅ | 6.20 | 0.35 | 0.53 | ❌ |
| FalseReject (Ours) | 16K | 44 | ✅ | ✅ | 40.46 | 0.26 | 0.65 | ✅ |

Comparison of FalseReject with existing over-refusal datasets. We bold the best scores for both LLM-generated and human-written ones. Topics is the number of sensitive topic categories covered. Train specifies whether the dataset includes a query-response training set. LLM-Gen indicates whether the dataset was created by LLMs (✅) or by humans (❌). Rejection Rate is the average rejection rate across a fixed set of LLMs. Self-BLEU (lower is better) and Dist-2 (distinct 2-grams, higher is better) measure diversity. CoT indicates whether the responses include long chain-of-thought reasoning.
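To make the Dist-2 column concrete, the following is a minimal sketch of a distinct-n computation: the number of unique n-grams divided by the total number of n-grams in a set of texts. The function name `distinct_n`, the lowercasing, and the whitespace tokenization are assumptions for illustration; the exact tokenizer and aggregation used to produce the table values are not specified here.

```python
from collections import Counter


def distinct_n(texts, n=2):
    """Return unique n-grams / total n-grams over all texts (0.0 if none)."""
    counts = Counter()
    for text in texts:
        # Assumption: lowercase + whitespace tokenization, chosen for simplicity.
        tokens = text.lower().split()
        # Slide a window of length n over the tokens to form n-grams.
        counts.update(zip(*(tokens[i:] for i in range(n))))
    total = sum(counts.values())
    return len(counts) / total if total else 0.0


# Hypothetical example queries, not taken from any of the datasets above.
queries = [
    "how do I kill a python process",
    "how do I kill weeds in my garden",
]
print(f"Dist-2: {distinct_n(queries):.2f}")
```

A higher value means fewer repeated bigrams across the prompts, which is why the table marks Dist-2 with ↑.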